Migrating Cockbox to Native ZFS Root With Encryption and Minimal Downtime


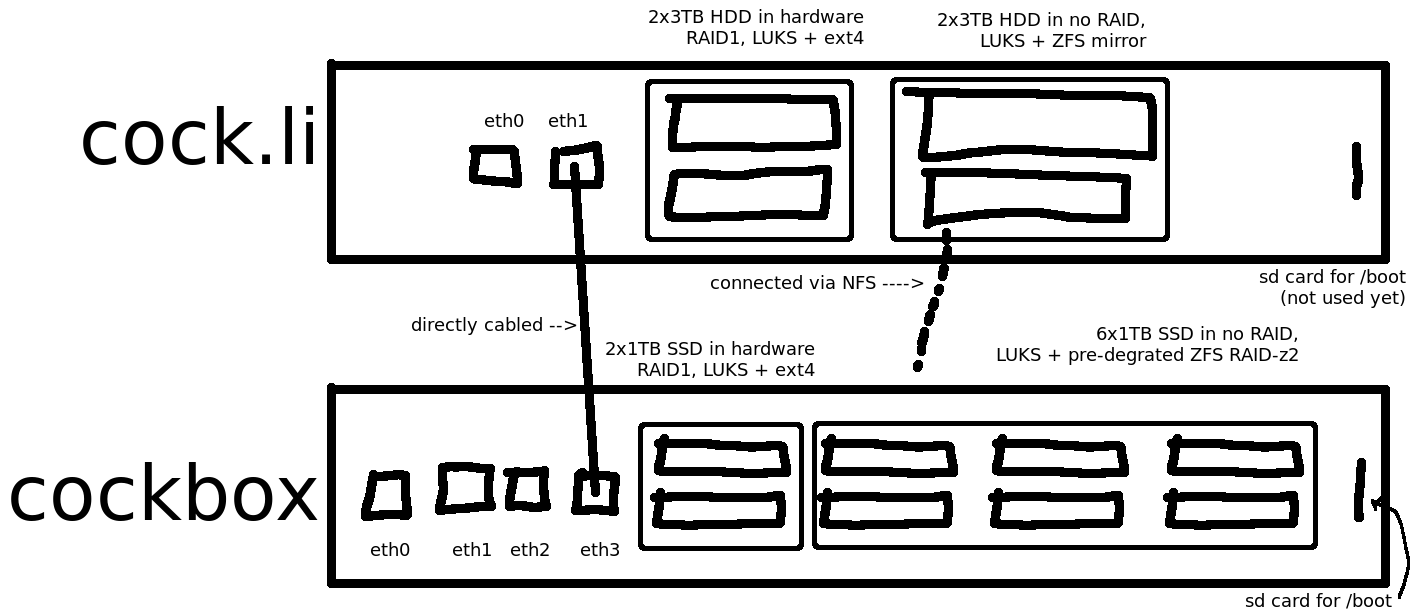

Cockbox, the VPS provider I started back in June of this year, has been operating swimmingly thus far. Unfortunately things over at cock.li haven't been so great. I learned the hard way that hardware-based RAID is the devil, but that's a post for another day. The problem here is that Cockbox is also using hardware-based RAID. Right now everything is stored on 2x1TB disks in RAID-1. There's a difference between shit performance (we're talking 60s+ I/O hangups) during a several-day rebuild on a meme sewer, where you can tell people to deal with it, and the same thing on an actual VPS provider where some geniuses have decided to pay real money for a service I'm providing. So the hardware RAID has to go. And the solution is ZFS.

Thankfully, this tied into a hardware upgrade nicely. Pre-migration, Cockbox had 2x1TB SSDs in RAID-1 (ext4 root) and 6x1TB uninitialized SSDs (yeah we got SSDs on deck son we ballin'). We also have 488GB of super critical VPS images on the ext4 root that we can't lose no matter what.

The goal: 8x1TB SSDs all individually encrypted with LUKS, with RAID-Z2 running on top hosting Debian+Xen. There is also an SD card which houses /boot and an encrypted partition holding a keyfile for the 8 SSDs.

Here's the hardware pre-migration:

I'll be initializing the 6 disks as a pre-degraded 8-disk array. Once we're booting with ZFS natively we can crack open that hardware RAID and attach the 2 remaining disks to the ZFS pool. It would not be wise, however, to move all of the VM images onto an array with no redundancy, so we'll be using 2x3TB disks on the cock.li server as interim storage over NFS.

WARNING: this is not meant to be a tutorial but more like a technical writeup. I left some stuff out of here; if you want to do something like this yourself, this is a pretty good resource.

Setting up the encryption

I'm using an SD card to hold both /boot and an encrypted partition containing a keyfile. So we set up encryption like this:

    # cryptsetup luksFormat /dev/sdb2
    # cryptsetup luksOpen /dev/sdb2 crypt_keyfile
    # dd if=/dev/urandom of=/dev/mapper/crypt_keyfile
    # /lib/cryptsetup/scripts/decrypt_derived crypt_keyfile > /tmp/key

    # cryptsetup luksFormat /dev/sdc /dev/mapper/crypt_keyfile
    # cryptsetup luksOpen /dev/sdc zfs_disk2
    # cryptsetup luksAddKey /dev/sdc /tmp/key --key-file /dev/mapper/crypt_keyfile

(repeat for sdd-sdh)

We need to add that derived key because the initramfs cryptroot setup apparently doesn't work with plain keyfiles, only keyscripts. More on that later.
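The "repeat for sdd-sdh" step can be sketched as a loop. This is a dry run that only prints the commands so you can eyeball them first. The sdc..sdh to zfs_disk2..zfs_disk7 mapping is inferred from the pool layout later in this post, and the `--key-file` on `luksOpen` is my assumption for running it non-interactively. `luksFormat` will still ask for confirmation when run for real.

```shell
#!/bin/bash
# Dry-run sketch: print the per-disk LUKS commands instead of running them.
# Assumption: sdc..sdh map to zfs_disk2..zfs_disk7.
luks_commands() {
    local n=2 letter dev
    for letter in c d e f g h; do
        dev="/dev/sd${letter}"
        echo "cryptsetup luksFormat ${dev} /dev/mapper/crypt_keyfile"
        # --key-file here is an assumption so the open doesn't prompt
        echo "cryptsetup luksOpen ${dev} zfs_disk${n} --key-file /dev/mapper/crypt_keyfile"
        echo "cryptsetup luksAddKey ${dev} /tmp/key --key-file /dev/mapper/crypt_keyfile"
        n=$((n + 1))
    done
}

luks_commands
```

Once the printed commands look right, they can be pasted or piped into a root shell.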

Creating the pre-degraded array

Since I don't have all 8 disks available, I had to create the ZFS pool in a pre-degraded state. This was done by using sparse files in place of the other 2 disks, then removing them once the array was created. There's no redundancy at this point, but there are 2 missing disks waiting to be replaced.

    # dd if=/dev/zero of=./zfs-sparse0.img bs=1 count=0 seek=1000169234432
    # dd if=/dev/zero of=./zfs-sparse1.img bs=1 count=0 seek=1000169234432

(1000169234432 is found from the fdisk -l output of the crypt devices)
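For reference, the same number can be read with `blockdev --getsize64`, and `truncate` makes the same kind of sparse file as the `dd ... count=0 seek=...` trick. A small sketch that uses a scratch directory and a made-up file name rather than the real /root paths, and assumes GNU `stat`:

```shell
#!/bin/bash
# Sketch: create a sparse placeholder and confirm it takes (almost) no real space.
# On the real host the size would come from something like:
#   blockdev --getsize64 /dev/mapper/zfs_disk2
SIZE=1000169234432                 # bytes, the figure quoted above
workdir=$(mktemp -d)               # scratch dir, not the /root path from the post
img="$workdir/zfs-sparse-demo.img" # hypothetical file name

truncate -s "$SIZE" "$img"         # same effect as dd with count=0 and seek=$SIZE

apparent=$(stat -c %s "$img")      # apparent size in bytes (GNU stat)
used_kb=$(du -k "$img" | cut -f1)  # space actually allocated, in KiB

echo "apparent size: $apparent bytes"
echo "allocated:     $used_kb KiB"

rm -rf "$workdir"
```

The apparent size is what ZFS sees when sizing the vdev; the allocated size is why a 1TB "disk" fits on a much smaller root filesystem.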

    # zpool create -f rpool raidz2 /root/zfs-sparse0.img /root/zfs-sparse1.img /dev/mapper/zfs_disk2 /dev/mapper/zfs_disk3 /dev/mapper/zfs_disk4 /dev/mapper/zfs_disk5 /dev/mapper/zfs_disk6 /dev/mapper/zfs_disk7
    # zpool export rpool
    # rm zfs-sparse*
    # zpool import -a

    # zpool list
    NAME    SIZE  ALLOC   FREE  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH    ALTROOT
    rpool  7.25T   971M  7.25T         -     0%     0%  1.00x  DEGRADED  -
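A quick sanity check on that SIZE column: for RAID-Z, `zpool list` reports raw capacity including parity, so 8 members of 1000169234432 bytes each come out just under 7.3 TiB raw, and what you can actually store is closer to six disks' worth. Rough arithmetic, ignoring ZFS labels, metadata and padding:

```shell
#!/bin/bash
# Back-of-the-envelope capacity check (ignores ZFS labels, metadata and padding).
DISK_BYTES=1000169234432   # per-device size quoted in the post
DISKS=8                    # full RAID-Z2 width, including the two placeholders
PARITY=2                   # RAID-Z2 keeps two disks' worth of parity

raw_bytes=$((DISK_BYTES * DISKS))
usable_bytes=$((DISK_BYTES * (DISKS - PARITY)))

# Convert bytes to TiB (2^40 bytes), the unit zpool list prints as "T".
to_tib() { awk -v b="$1" 'BEGIN { printf "%.2f", b / (1024 ^ 4) }'; }

echo "raw:    $(to_tib "$raw_bytes") TiB"
echo "usable: $(to_tib "$usable_bytes") TiB (approximate)"
```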

At this point Debian was installed using debootstrap, then a kernel, bootloader (grub2), ZFS, and cryptsetup were installed as well. The system won't boot yet though, because it doesn't know how to decrypt the drives on boot!

Decrypting the drives on boot

First, we need to copy the decrypt_derived script from earlier into the initramfs scripts directory. In a chroot:

    # mkdir /etc/initramfs-tools/scripts/luks
    # cp /lib/cryptsetup/scripts/decrypt_derived /etc/initramfs-tools/scripts/luks/get.root_crypt.decrypt_derived

The script needs to be edited a bit to grab the correct device; see the link at the top of this post for more on that.

Now, we need to tell initramfs which disks to decrypt on boot, and in which order. In /etc/initramfs-tools/conf.d/cryptroot:

    target=crypt_keyfile,source=UUID=(uuid),key=none
    target=zfs_disk2,source=UUID=(uuid),keyscript=/scripts/luks/get.root_crypt.decrypt_derived
    target=zfs_disk3,source=UUID=(uuid),keyscript=/scripts/luks/get.root_crypt.decrypt_derived
    target=zfs_disk4,source=UUID=(uuid),keyscript=/scripts/luks/get.root_crypt.decrypt_derived
    target=zfs_disk5,source=UUID=(uuid),keyscript=/scripts/luks/get.root_crypt.decrypt_derived
    target=zfs_disk6,source=UUID=(uuid),keyscript=/scripts/luks/get.root_crypt.decrypt_derived
    target=zfs_disk7,source=UUID=(uuid),keyscript=/scripts/luks/get.root_crypt.decrypt_derived
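Typing seven near-identical lines by hand invites typos, so the file can be generated instead. A sketch, assuming the mapping names used above. The `cryptroot_line` helper is a made-up name, and the UUIDs here are placeholders that would come from `blkid -s UUID -o value <device>` on the real machine:

```shell
#!/bin/bash
# Sketch: build conf.d/cryptroot entries. cryptroot_line is a hypothetical helper.
KEYSCRIPT=/scripts/luks/get.root_crypt.decrypt_derived

# cryptroot_line TARGET UUID [KEYSCRIPT]
# With no keyscript the entry uses key=none, i.e. a passphrase prompt at boot.
cryptroot_line() {
    local target=$1 uuid=$2 keyscript=${3:-}
    if [ -n "$keyscript" ]; then
        echo "target=${target},source=UUID=${uuid},keyscript=${keyscript}"
    else
        echo "target=${target},source=UUID=${uuid},key=none"
    fi
}

# Placeholder UUIDs; on the real host substitute the blkid output per device.
cryptroot_line crypt_keyfile "UUID-OF-SDB2"
for n in 2 3 4 5 6 7; do
    cryptroot_line "zfs_disk${n}" "UUID-OF-DISK${n}" "$KEYSCRIPT"
done
```

The keyfile partition goes first on purpose: everything after it derives its key from the already-opened crypt_keyfile mapping.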

No, /etc/crypttab doesn't matter here. This is the bee's knees, and running update-initramfs -u -k all will update the initramfs with the new script and decryption configuration.

Copy the VMs over to NFS

This is done with a bash script, transfer.sh:

    #!/bin/bash
    # Move one Xen VM's disk images to NFS and restart it from there.

    VM=$1

    if [ -z "$VM" ]
    then
        echo "you need to specify a vm you dumb idiot"
        exit 1
    fi

    if [ ! -f "/etc/xen/$VM.cfg" ]
    then
        echo "the vm config file doesn't even exist"
        exit 1
    fi

    echo "transferring vm $VM"
    # Point the config at the NFS copy (original config is kept as .cfg.bak)
    sed -i.bak 's,/srv/xen/domains/,/srv/nfs/domains/,' "/etc/xen/$VM.cfg"

    echo "destroying VM"
    # Hard stop: the guest gets no chance to shut down cleanly
    xl destroy "$VM"

    echo "copying VM"
    cp -r "/srv/xen/domains/$VM" /srv/nfs/domains/

    echo "launching VM"
    xl create "/etc/xen/$VM.cfg"

    echo "das it mane"

Transferring back was done with the same script, just with different directories, and it was named hollaback.sh.
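The only part that really changes between the two directions is the `sed` path rewrite. Here's a small demonstration against a throwaway config. The disk line is a made-up example in the style xen-tools generates, not one of the real VM configs, and I'm assuming the return trip simply swaps the two directories:

```shell
#!/bin/bash
# Sketch: show the path rewrite in both directions on a scratch config file.
cfg=$(mktemp)
# Hypothetical disk line; real configs will differ.
echo "disk = [ 'file:/srv/xen/domains/examplevm/disk.img,xvda2,w' ]" > "$cfg"

# transfer.sh direction: local storage -> NFS (keeps a .bak copy of the original)
sed -i.bak 's,/srv/xen/domains/,/srv/nfs/domains/,' "$cfg"
to_nfs=$(cat "$cfg")

# hollaback.sh direction (assumed): NFS -> local storage
sed -i.bak 's,/srv/nfs/domains/,/srv/xen/domains/,' "$cfg"
back_home=$(cat "$cfg")

echo "on NFS:   $to_nfs"
echo "restored: $back_home"
rm -f "$cfg" "$cfg.bak"
```

Note that without a trailing `g` the substitution only hits the first match on each line, which is fine as long as every disk entry sits on its own line.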

Testing the ZFS root

Luckily, since Xen was installed and running already, I was able to configure the SD card and 6 SSDs as physical devices attached to an HVM, and then use VNC to connect and verify everything was running fine.

This all happened with under an hour of total downtime, and that includes a trip to the datacenter to install the SD cards (which are located inside of the server). Now Cockbox is fully prepared to handle up to 180 1GB servers; I just need to wait for my purchase of 256 IP addresses to drop :)